安装PCL

PCL(Point Cloud Library)是在吸收了前人点云相关研究基础上建立起来的大型跨平台开源C++编程库,它实现了大量点云相关的通用算法和高效数据结构,涉及到点云获取、滤波、分割、配准、检索、特征提取、识别、追踪、曲面重建、可视化等。

具体安装过程不在赘述,大致安装过程如下:

1.安装各种依赖库

2.Qt4切换到Qt5

1

2

3

| qmake -v

cd /usr/lib/x86_64-linux-gnu/qt-default/qtchooser

sudo gedit default.conf

|

将里面的Qt4目录改为QT5的安装目录

3.通过编译源码安装VTK7.1.1,需要cmake gui

4.推荐直接通过命令行安装,通过源码安装依赖太多编译不容易通过

(通过命令行安装的PCL版本是1.7.2)

安装好后可以找个例程测试一下pcl visualizer能否正常显示

相关例程及cmakelist百度云:https://pan.baidu.com/s/1hZU0FzA3iyXTCS-AHXWS1Q

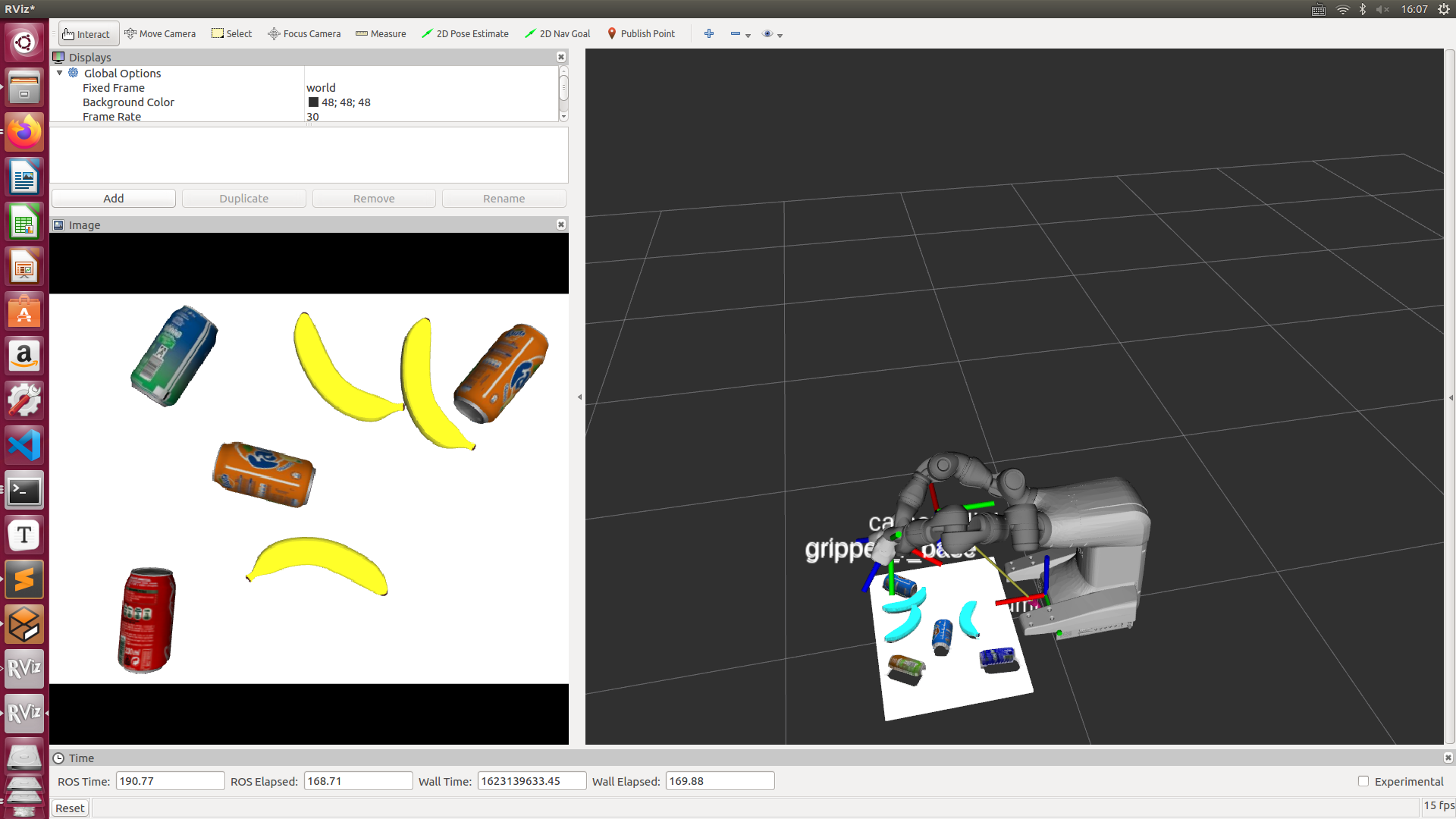

搭建环境

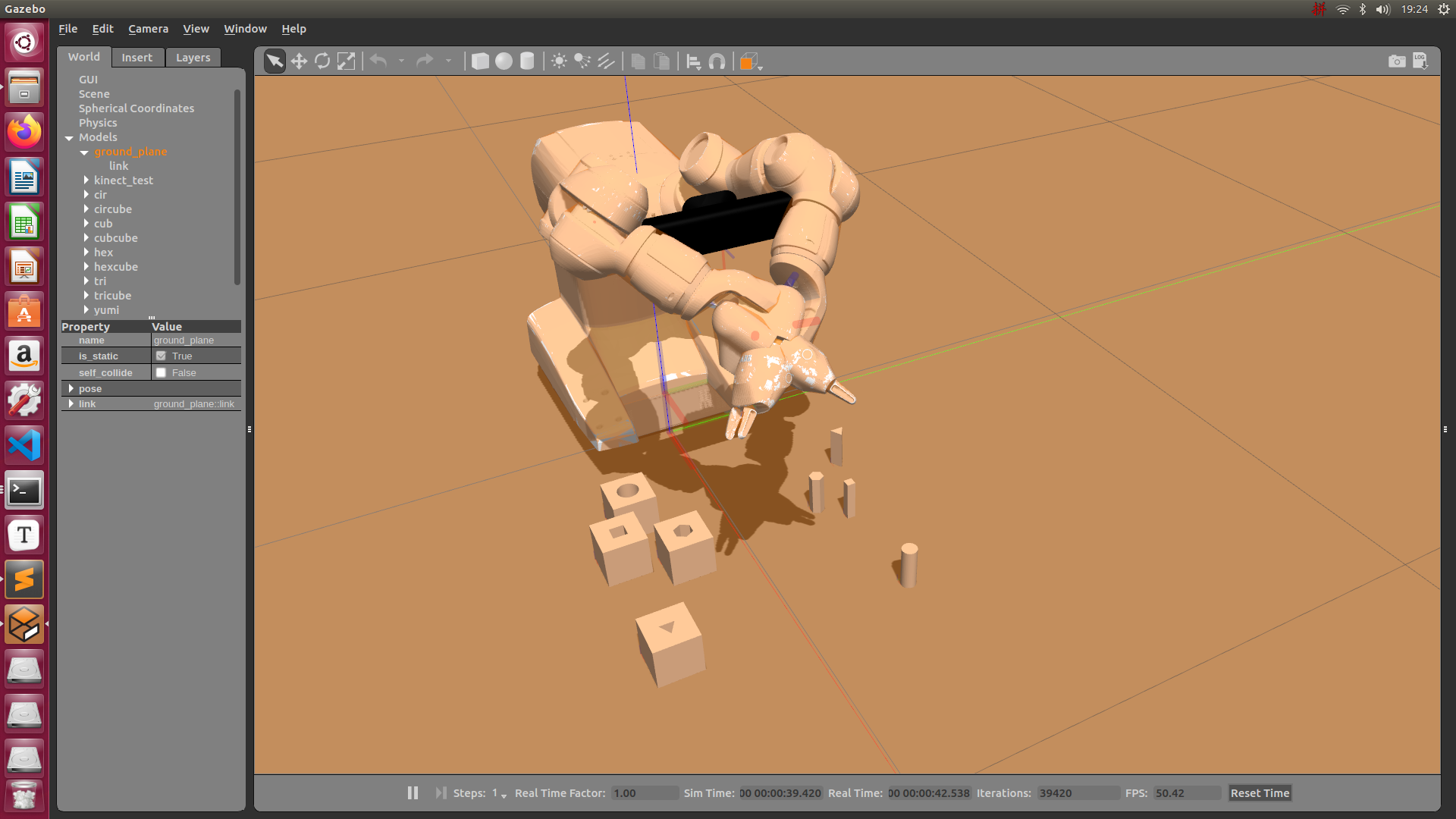

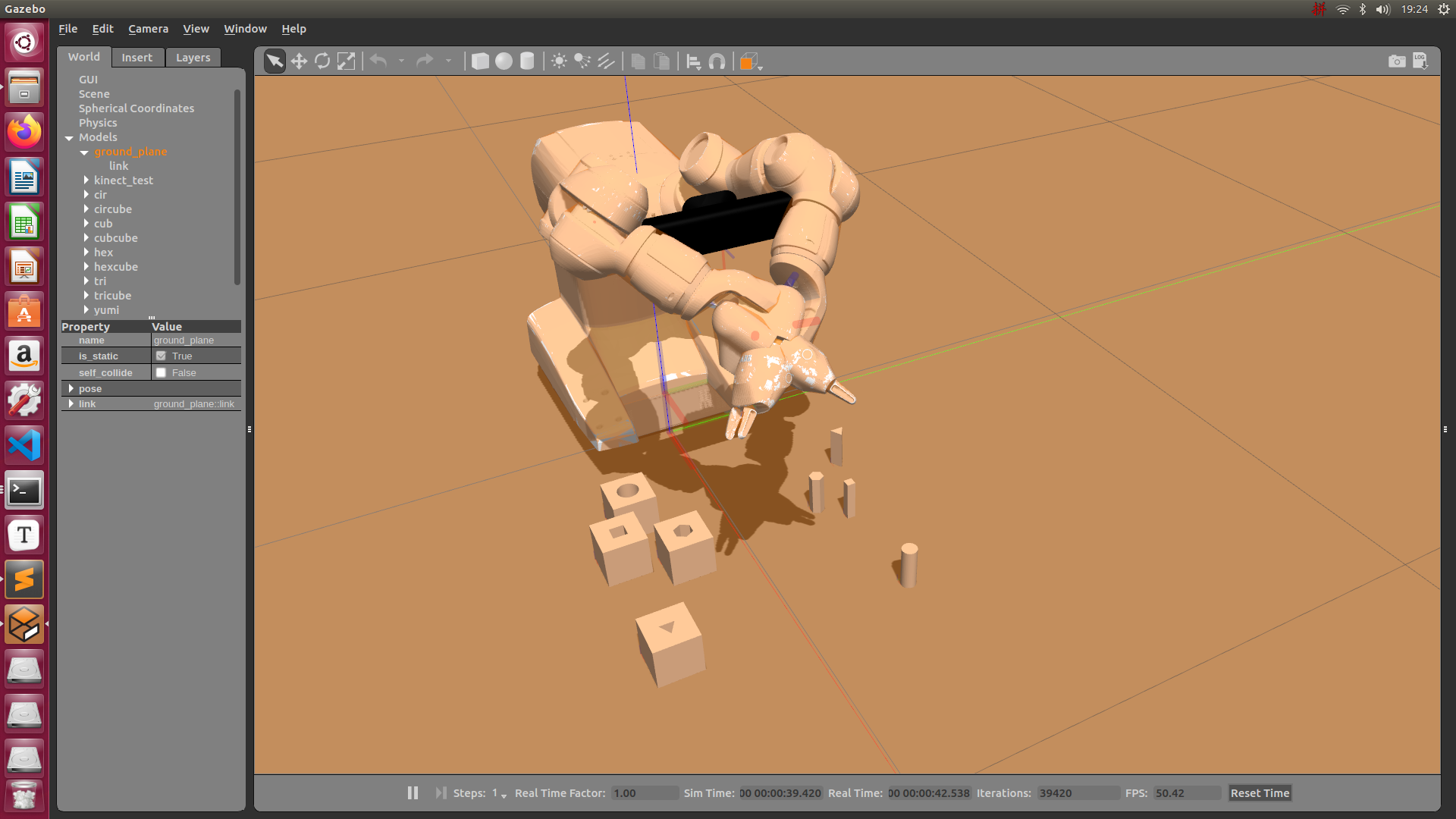

该world在原来食品分类的基础上修改得到

工件STL模型百度云:https://pan.baidu.com/s/13mkX0bAiHVC86QqyWYthbQ

由于相机畸变等因素,对于较长的柱状工件,其侧面在点云中或是RGB图像中对位姿估计的干扰都太大,因此为了 简化任务量,索性将任务改为将正方体工件装配到固定位置的柱状工件上面

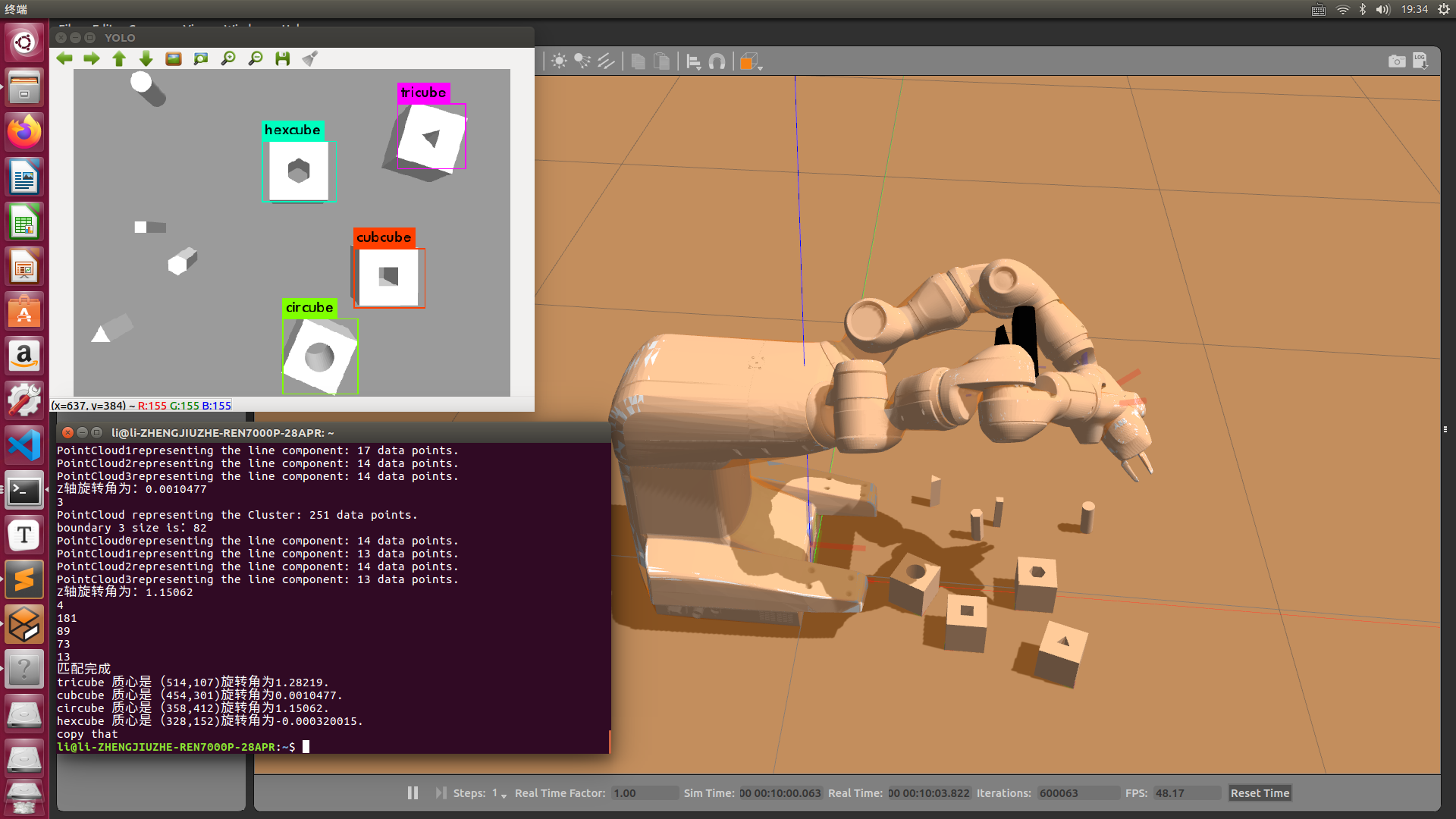

位姿估计

- 位姿估计采用点云进行欧几里得聚类和RANSAC拟合直线的方法;

- 至于正方体的分类并没有采用RGB图像进行边缘检测和多边形逼近,而是采用了深度神经网络进行分类再将分类的预测框中心和各个聚类的中心进行匹配从而得出各个聚类对应的工件名称

点云处理

创建功能包

1

2

| catkin_create_pkg pclproc pcl_conversions pcl_ros pcl_msgs roscpp rospy std_msgs darknet_ros_msgs sensor_msgs

catkin_make -DCATKIN_WHITELIST_PACKAGES="pclproc"

|

1.格式转换

由 sensor_msgs::PCLPointCloud2转换为 pcl::PointCloud<pcl::PointXYZ>

具体方法方法可参考(PCL中点云数据格式之间的转化 - Being_young - 博客园 (cnblogs.com))

void pcl::toROSMsg(const pcl::PointCloud<T> &, sensor_msgs::PointCloud2 &);

2.直通滤波

对于在空间分布有一定空间特征的点云数据,比如使用线结构光扫描的方式采集点云,沿z向分布较广,但x,y向的分布处于有限范围内。此时可使用直通滤波器,确定点云在x或y方向上的范围,可较快剪除离群点,达到第一步粗处理的目的。

1

2

3

4

5

6

7

8

| cout << " Cloud before filtering: " << cloud->points.size() << endl;

pcl::PassThrough<pcl::PointXYZ> pass;

pass.setInputCloud(cloud);

pass.setFilterFieldName("z");

pass.setFilterLimits(0.49, 0.52);

pass.setNegative(false);

pass.filter(*cloudf);

cout << "Cloud after filtering: " << cloudf->points.size() << endl;

|

3.体素栅格下采样

由于点云的海量和无序性,直接处理的方式在对邻域进行搜索时需要较高的计算成本。一个常用的解决方式就是对点云进行下采样,将对全部点云的操作转换到下采样所得到的点上,降低计算量;所谓体素栅格是将点云空间划分为一个个极小的格子,格子里包含几个点。对体素栅格里面的点取平均或者加权平均,得到一个点,代替原来的几个点。显然,栅格选的越大,滤波后点云点数越少,速度快,但是会对原来的点云过度模糊;栅格选的越小,作用反之。

1

2

3

4

5

6

7

8

9

| std::cout << "PointCloud before sampling has: " << cloudf->points.size () << " data points." << std::endl;

pcl::VoxelGrid<pcl::PointXYZ> vg;

pcl::PointCloud<pcl::PointXYZ>::Ptr cloud_filtered (new pcl::PointCloud<pcl::PointXYZ>);

vg.setInputCloud (cloudf);

vg.setLeafSize (0.005f, 0.005f, 0.005f);

vg.filter (*cloud_filtered);

std::cout << "PointCloud after sampling has: " << cloud_filtered->points.size () << " data points." << std::endl;

|

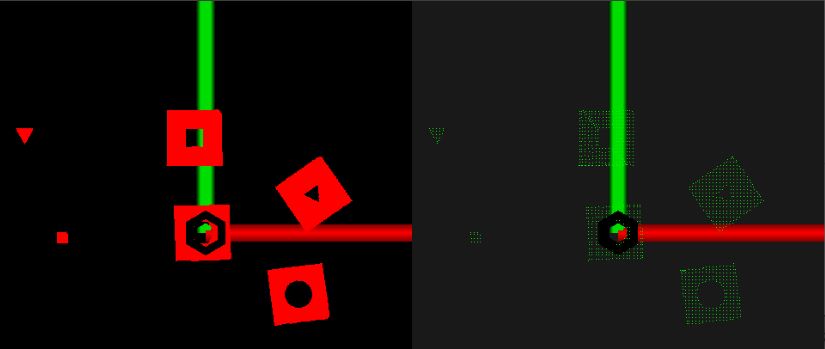

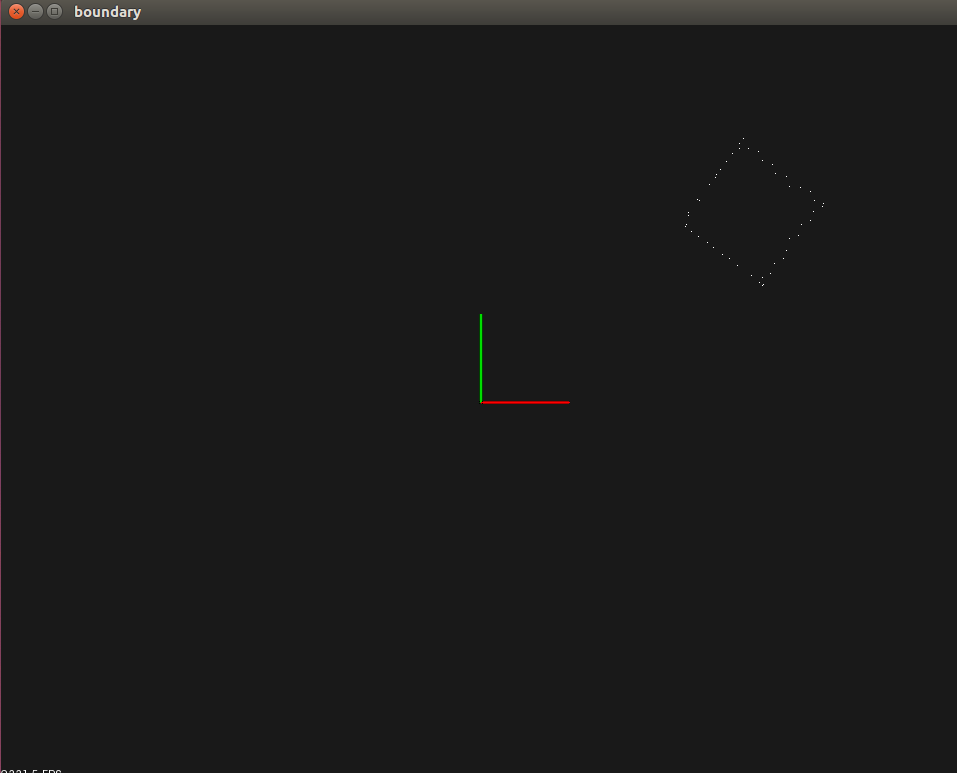

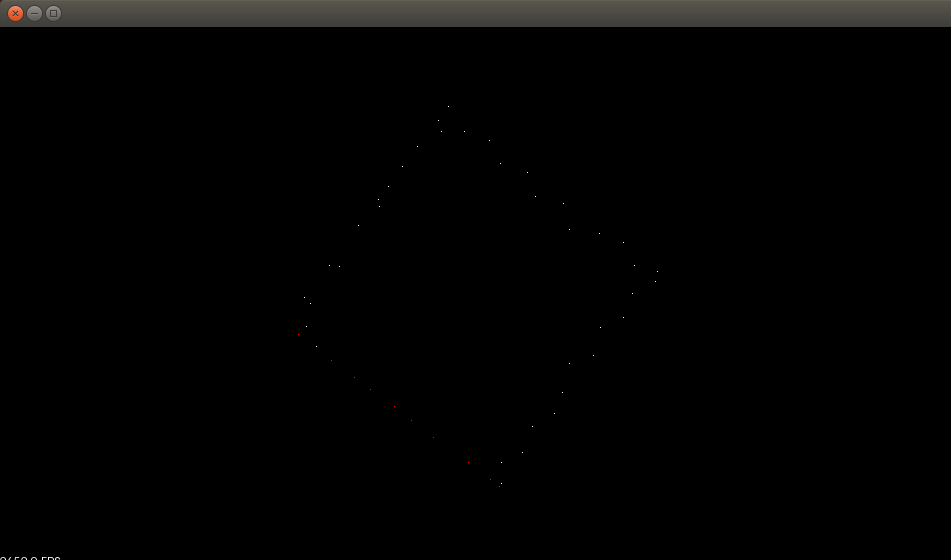

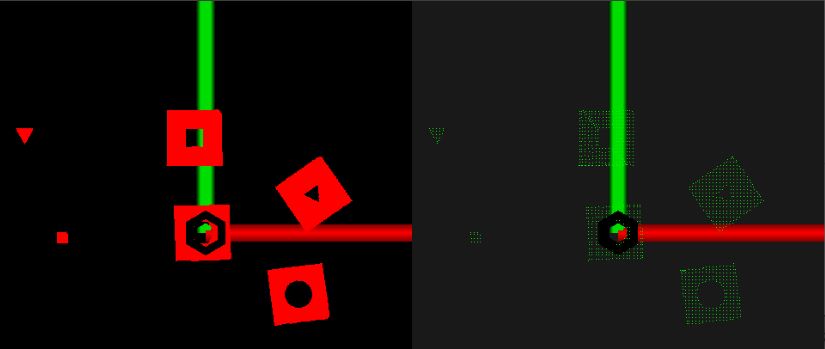

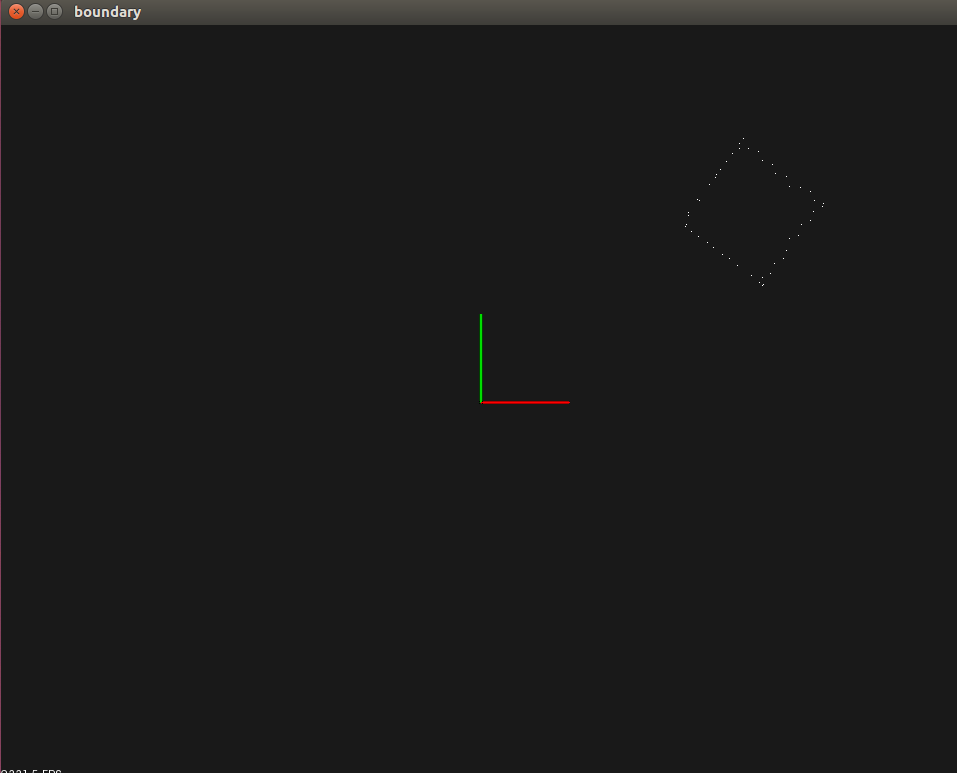

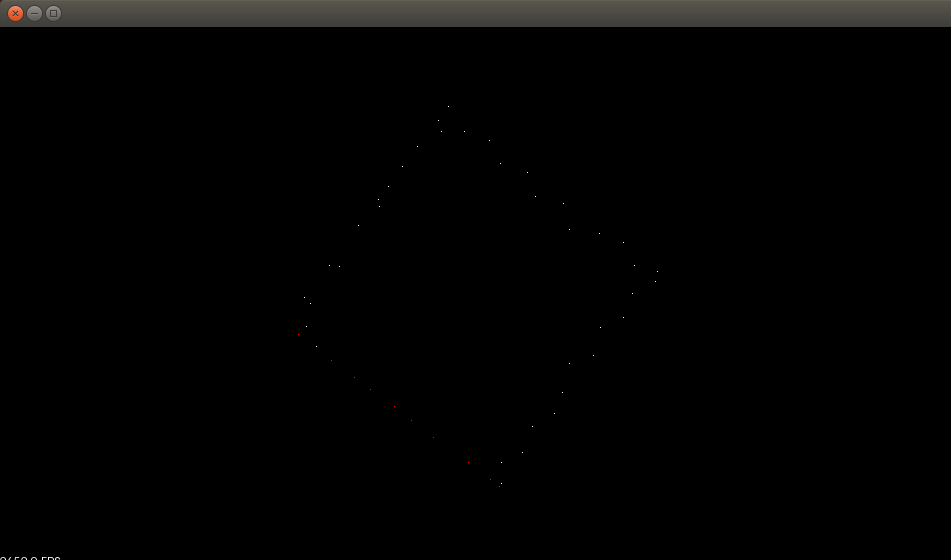

左图为直通滤波,右图为下采样

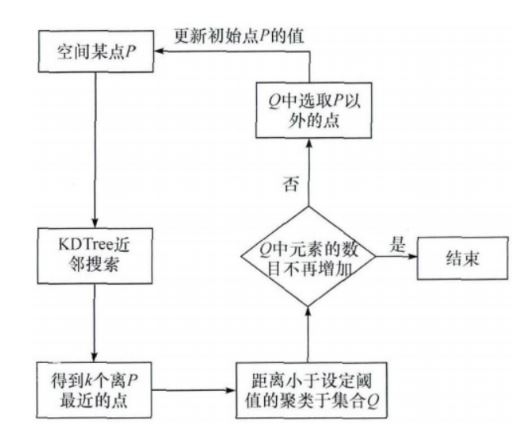

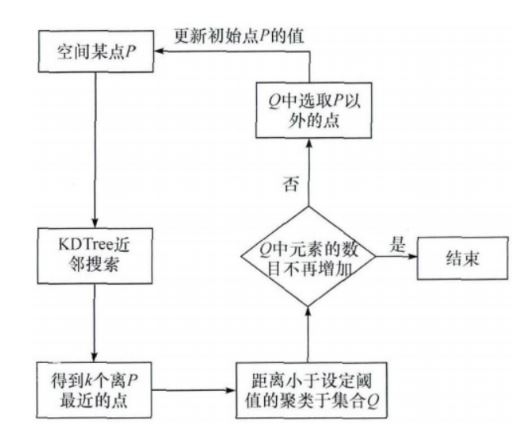

4.欧几里得聚类

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

|

pcl::search::KdTree<pcl::PointXYZ>::Ptr tree (new pcl::search::KdTree<pcl::PointXYZ>);

tree->setInputCloud (cloud_filtered);

pcl::PCDWriter writer;

std::vector<pcl::PointIndices> cluster_indices;

pcl::EuclideanClusterExtraction<pcl::PointXYZ> ec;

ec.setClusterTolerance (0.015);

ec.setMinClusterSize (100);

ec.setMaxClusterSize (25000);

ec.setSearchMethod (tree);

ec.setInputCloud (cloud_filtered);

ec.extract (cluster_indices);

int j = 0;

for (std::vector<pcl::PointIndices>::const_iterator it = cluster_indices.begin (); it != cluster_indices.end (); ++it)

{

pcl::PointCloud<pcl::PointXYZ>::Ptr cloud_cluster (new pcl::PointCloud<pcl::PointXYZ>);

for (std::vector<int>::const_iterator pit = it->indices.begin (); pit != it->indices.end (); ++pit)

cloud_cluster->points.push_back (cloud_filtered->points[*pit]);

cloud_cluster->width = cloud_cluster->points.size ();

cloud_cluster->height = 1;

cloud_cluster->is_dense = true;

std::cout << "PointCloud representing the Cluster: " << cloud_cluster->points.size () << " data points." << std::endl;

std::stringstream ss;

ss << "cloud_cluster_" << j << ".pcd";

writer.write<pcl::PointXYZ> (ss.str (), *cloud_cluster, false);

}

|

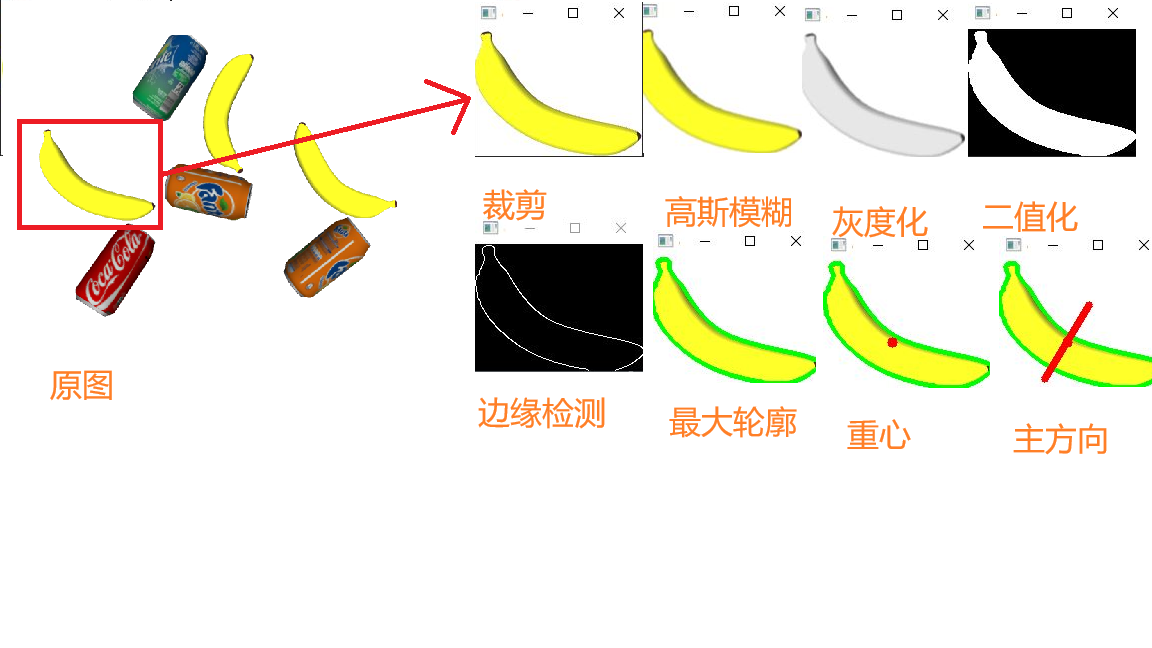

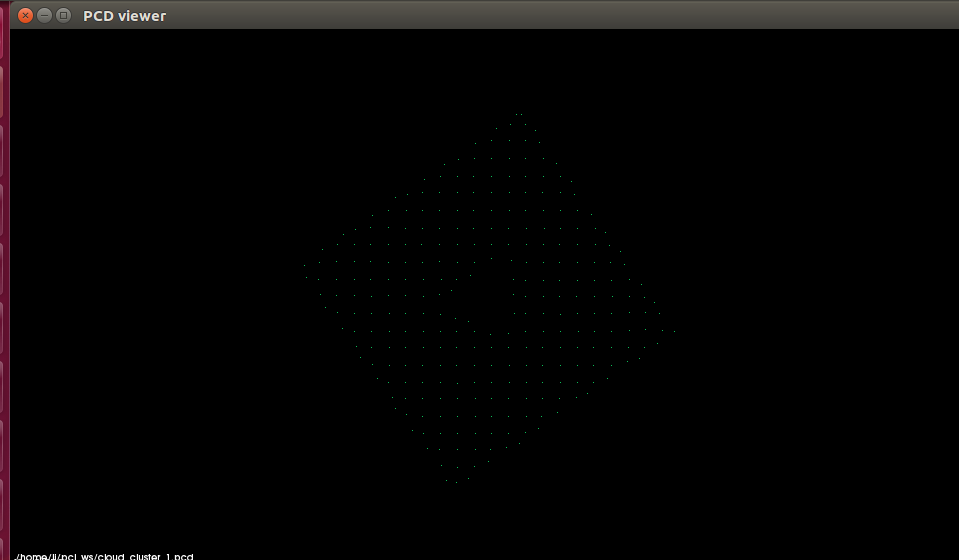

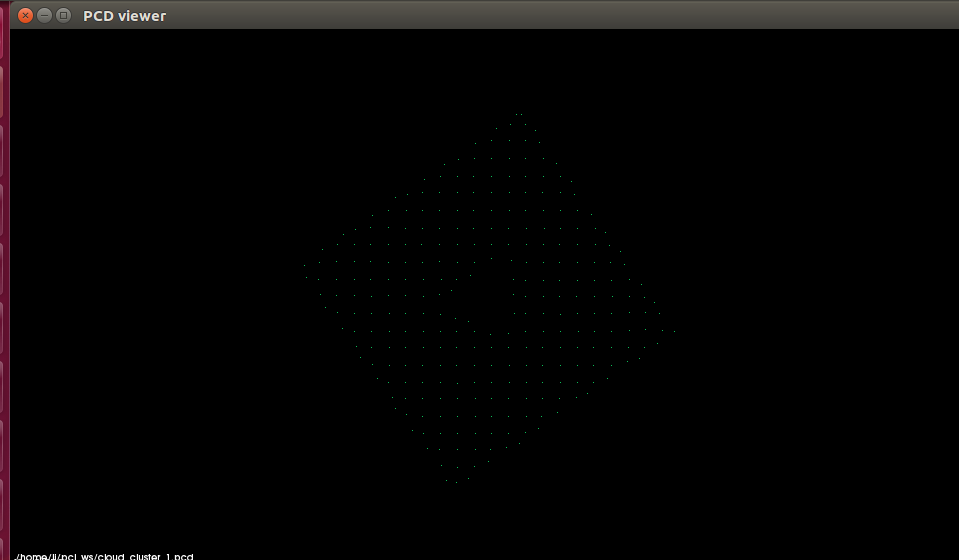

5.边缘提取与质心估计

对点云数据集的每个点的法线估计,可以看作是对表面法线的近似推断,从原始点云上计算出法线,再由法线结合数据估计出边界。

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

|

pcl::PointCloud<pcl::Normal>::Ptr normals(new pcl::PointCloud<pcl::Normal>);

pcl::PointCloud<pcl::Boundary> boundaries;

pcl::BoundaryEstimation<pcl::PointXYZ, pcl::Normal, pcl::Boundary> est;

pcl::search::KdTree<pcl::PointXYZ>::Ptr tree2(new pcl::search::KdTree<pcl::PointXYZ>());

pcl::NormalEstimationOMP<pcl::PointXYZ, pcl::Normal> normEst;

normEst.setNumberOfThreads(12);

normEst.setInputCloud(cloud_cluster);

normEst.setSearchMethod(tree2);

normEst.setRadiusSearch(0.02);

normEst.compute(*normals);

est.setInputCloud(cloud_cluster);

est.setInputNormals(normals);

est.setSearchMethod(tree2);

est.setKSearch(500);

est.compute(boundaries);

pcl::PointCloud<pcl::PointXYZ>::Ptr boundPoints(new pcl::PointCloud<pcl::PointXYZ>);

pcl::PointCloud<pcl::PointXYZ> noBoundPoints;

int countBoundaries = 0;

for (int i = 0; i<cloud_cluster->size(); i++)

{

uint8_t x = (boundaries.points[i].boundary_point);

int a = static_cast<int>(x);

if (a == 1)

{

(*boundPoints).push_back(cloud_cluster->points[i]);

countBoundaries++;

}

else

noBoundPoints.push_back(cloud_cluster->points[i]);

}

std::cout << "boundary "<<j<<" size is:" << countBoundaries << std::endl;

|

质心估计

1

2

| Eigen::Vector4f centroid;

pcl::compute3DCentroid(*boundPoints, centroid);

|

得到的centroid[]是一个长度为3的数组

6.RANSAC拟合边缘直线

RANSAC(RAndom SAmple Consensus,随机采样一致)算法是从一组含有“外点”(outliers)的数据中正确估计数学模型参数的迭代算法,一直迭代到估计出认为比较好的模型。

具体的实现步骤可以分为以下几步:

- 选择出可以估计出模型的最小数据集;(对于直线拟合来说就是两个点,对于计算Homography矩阵就是4个点)

- 使用这个数据集来计算出数据模型;

- 将所有数据带入这个模型,计算出“内点”的数目;(累加在一定误差范围内的适合当前迭代推出模型的数据)

- 比较当前模型和之前推出的最好的模型的“内点“的数量,记录最大“内点”数的模型参数和“内点”数;

- 重复1-4步,直到迭代结束或者当前模型已经足够好了(“内点数目大于一定数量”)。

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

42

43

44

45

|

double list_dir[2];

double list_pre_dir[4];

Eigen::Vector4f centroid;

pcl::compute3DCentroid(*boundPoints, centroid);

pcl::ModelCoefficients::Ptr coefficients(new pcl::ModelCoefficients);

pcl::PointIndices::Ptr inliers(new pcl::PointIndices);

pcl::SACSegmentation<pcl::PointXYZ> seg2;

seg2.setOptimizeCoefficients(true);

seg2.setModelType(pcl::SACMODEL_LINE);

seg2.setMethodType(pcl::SAC_RANSAC);

seg2.setDistanceThreshold(0.001);

for(int i=0;i<4;i++)

{

pcl::PointCloud<pcl::PointXYZ>::Ptr cloud_line(new pcl::PointCloud<pcl::PointXYZ>);

pcl::PointCloud<pcl::PointXYZ>::Ptr cloud_out_line(new pcl::PointCloud<pcl::PointXYZ>);

seg2.setInputCloud(boundPoints);

seg2.segment(*inliers, *coefficients);

list_pre_dir[i]=coefficients->values[4]/coefficients->values[3];

pcl::ExtractIndices<pcl::PointXYZ> extract;

extract.setInputCloud (boundPoints);

extract.setIndices (inliers);

extract.setNegative (false);

extract.filter (*cloud_line);

std::cout << "PointCloud"<<i<< "representing the line component: " << cloud_line->points.size () << " data points." << std::endl;

extract.setNegative (true);

extract.filter (*cloud_out_line);

*boundPoints = *cloud_out_line;

}

|

由于正方形边缘具有四条边,每拟合出一条边余下的点便继续用于直线拟合,最后会拟合出四条直线,根据直线的前两个方向向量之比估算出直线斜率,四条直线斜率符号相同的两两之和取平均,便有了工件坐标系的x,y轴分量

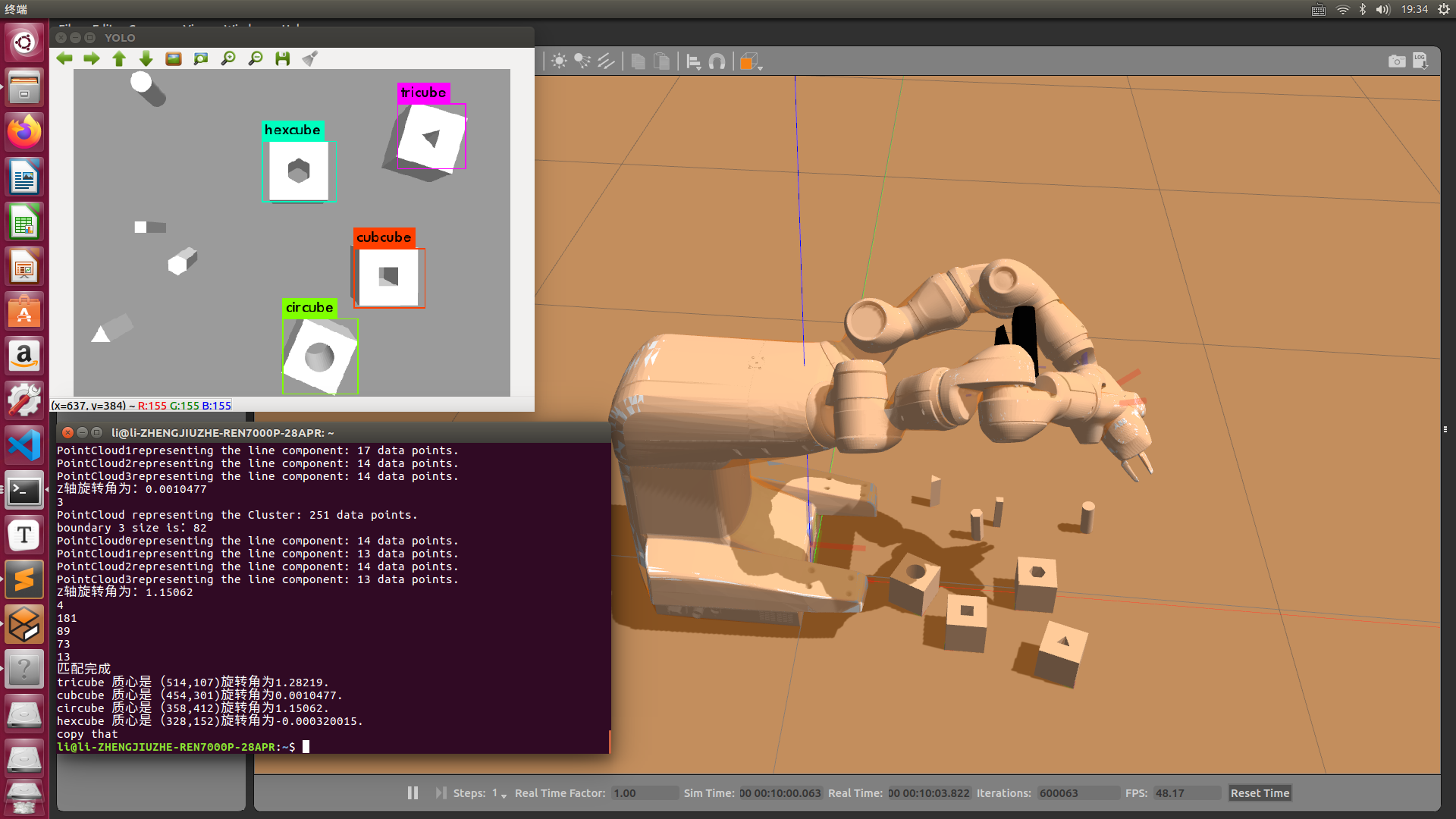

7.工件分类

对于分类问题,尝试过1.用聚类中的点云数目比较大小,2.用上表面的高度比较大小—-但是效果都不太好

3.决定采用yolo进行分类,然后将预测框与之前点云估计出的质心相匹配,为什么这一步是跟pointcloud结合而不是rgb图像?1.正方体侧面干扰太大2.正方体是规则几何体,主方向容易取到对角线

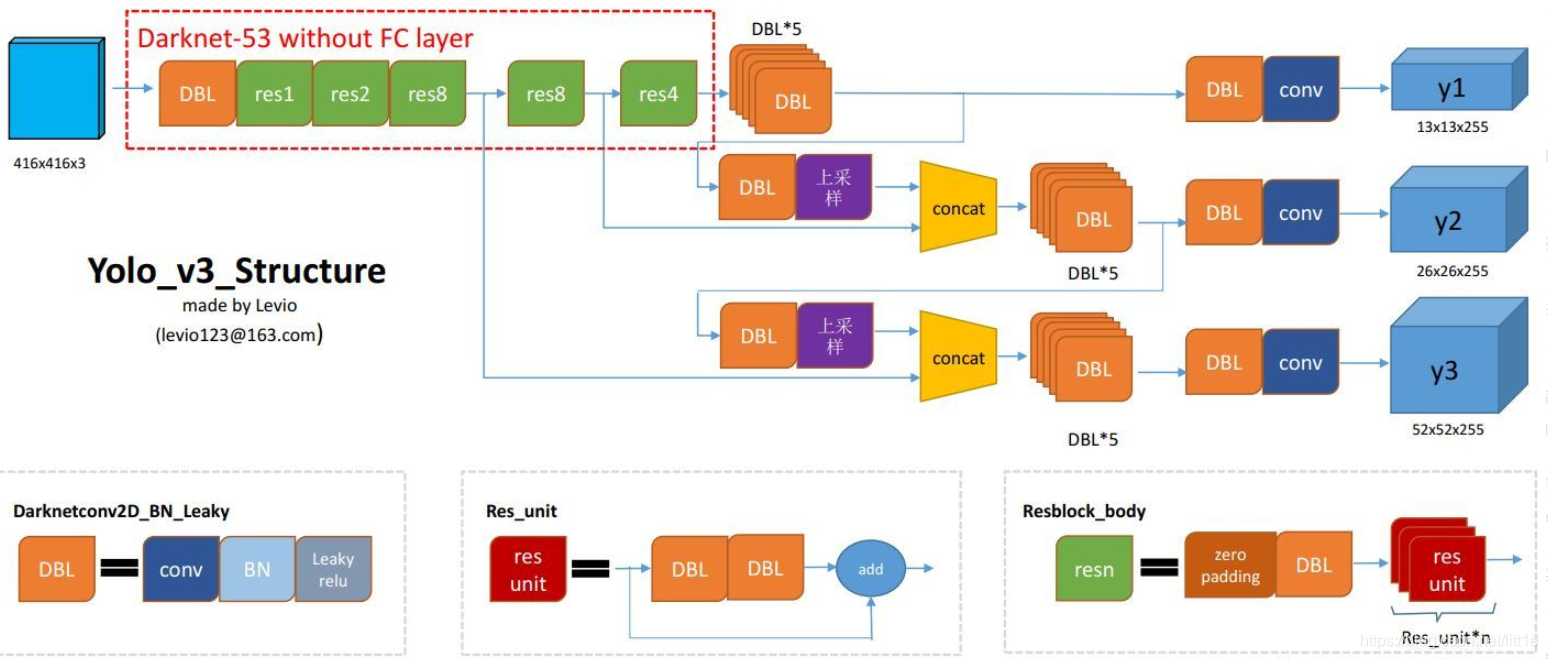

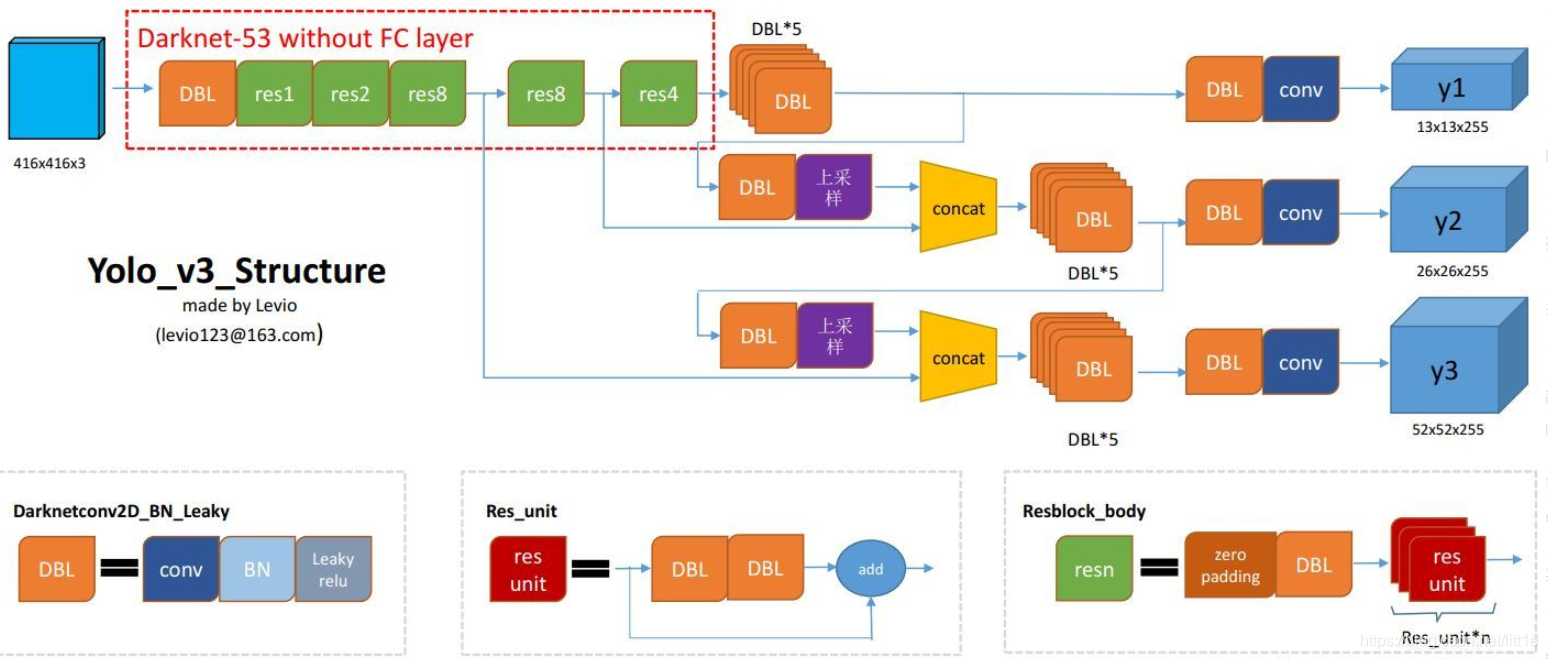

采用yolo-v3的ros版本—darknet_ros,实现视频流的检测与分类

数据集百度云:链接:https://pan.baidu.com/s/11uRc4wOMqCsnLiakXJDeqg

复制这段内容后打开百度网盘手机App,操作更方便哦—来自百度网盘超级会员V1的分享

权重文件百度云:链接:https://pan.baidu.com/s/1mz-b-k-9gyn-ViGrn6yI6g

提取码:1234

复制这段内容后打开百度网盘手机App,操作更方便哦—来自百度网盘超级会员V1的分享

网络结构解析:

- Yolov3中,只有卷积层,通过调节卷积步长控制输出特征图的尺寸。所以对于输入图片尺寸没有特别限制。流程图中,输入图片以256*256作为样例。

- Yolov3借鉴了金字塔特征图思想,小尺寸特征图用于检测大尺寸物体,而大尺寸特征图检测小尺寸物体。特征图的输出维度为

![[公式]](https://www.zhihu.com/equation?tex=N%5Ctimes+N+%5Ctimes+%5B3+%5Ctimes+%284+%2B+1+%2B+80%29%5D) ,

, ![[公式]](https://www.zhihu.com/equation?tex=N%5Ctimes+N) 为输出特征图格点数,一共3个Anchor框,每个框有4维预测框数值

为输出特征图格点数,一共3个Anchor框,每个框有4维预测框数值 ![[公式]](https://www.zhihu.com/equation?tex=t_x+%2Ct_y+%2Ct_w%2C+t_h) ,1维预测框置信度,80维物体类别数。所以第一层特征图的输出维度为

,1维预测框置信度,80维物体类别数。所以第一层特征图的输出维度为 ![[公式]](https://www.zhihu.com/equation?tex=8+%5Ctimes+8+%5Ctimes+255) 。

。

- Yolov3总共输出3个特征图,第一个特征图下采样32倍,第二个特征图下采样16倍,第三个下采样8倍。输入图像经过Darknet-53(无全连接层),再经过Yoloblock生成的特征图被当作两用,第一用为经过33卷积层、11卷积之后生成特征图一,第二用为经过1*1卷积层加上采样层,与Darnet-53网络的中间层输出结果进行拼接,产生特征图二。同样的循环之后产生特征图三。

- concat操作与加和操作的区别:加和操作来源于ResNet思想,将输入的特征图,与输出特征图对应维度进行相加,即

![[公式]](https://www.zhihu.com/equation?tex=y+%3D+f%28x%29%2Bx) ;而concat操作源于DenseNet网络的设计思路,将特征图按照通道维度直接进行拼接,例如8816的特征图与8816的特征图拼接后生成8832的特征图。

;而concat操作源于DenseNet网络的设计思路,将特征图按照通道维度直接进行拼接,例如8816的特征图与8816的特征图拼接后生成8832的特征图。

- 上采样层(upsample):作用是将小尺寸特征图通过插值等方法,生成大尺寸图像。例如使用最近邻插值算法,将88的图像变换为1616。上采样层不改变特征图的通道数。

Yolo的整个网络,吸取了Resnet、Densenet、FPN的精髓,可以说是融合了目标检测当前业界最有效的全部技巧。

8.yolo预测框与各个点云聚类匹配

思路就是计算两者质心的距离,根据最小距离匹配出对应的预测框和点云

完整源码

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

42

43

44

45

46

47

48

49

50

51

52

53

54

55

56

57

58

59

60

61

62

63

64

65

66

67

68

69

70

71

72

73

74

75

76

77

78

79

80

81

82

83

84

85

86

87

88

89

90

91

92

93

94

95

96

97

98

99

100

101

102

103

104

105

106

107

108

109

110

111

112

113

114

115

116

117

118

119

120

121

122

123

124

125

126

127

128

129

130

131

132

133

134

135

136

137

138

139

140

141

142

143

144

145

146

147

148

149

150

151

152

153

154

155

156

157

158

159

160

161

162

163

164

165

166

167

168

169

170

171

172

173

174

175

176

177

178

179

180

181

182

183

184

185

186

187

188

189

190

191

192

193

194

195

196

197

198

199

200

201

202

203

204

205

206

207

208

209

210

211

212

213

214

215

216

217

218

219

220

221

222

223

224

225

226

227

228

229

230

231

232

233

234

235

236

237

238

239

240

241

242

243

244

245

246

247

248

249

250

251

252

253

254

255

256

257

258

259

260

261

262

263

264

265

266

267

268

269

270

271

272

273

274

275

276

277

278

279

280

281

282

283

284

285

286

287

288

289

290

291

292

293

294

295

296

297

298

299

300

301

302

303

304

305

306

307

308

309

310

311

312

313

314

315

316

317

318

319

320

321

322

323

324

325

326

327

328

329

330

331

332

333

334

335

336

337

| #include <vector>

#include <ctime>

#include <iostream>

#include <string>

#include<math.h>

#include <ros/ros.h>

#include <Eigen/Core>

#include <pcl/ModelCoefficients.h>

#include <pcl/point_types.h>

#include <pcl/io/pcd_io.h>

#include <pcl/filters/extract_indices.h>

#include <pcl/filters/voxel_grid.h>

#include <pcl/features/normal_3d.h>

#include <pcl/kdtree/kdtree.h>

#include <pcl/sample_consensus/method_types.h>

#include <pcl/sample_consensus/model_types.h>

#include <pcl/segmentation/sac_segmentation.h>

#include <pcl/segmentation/extract_clusters.h>

#include <pcl/filters/passthrough.h>

#include <pcl/point_cloud.h>

#include <pcl/visualization/pcl_visualizer.h>

#include <boost/thread/thread.hpp>

#include <pcl/console/parse.h>

#include <pcl/features/eigen.h>

#include <pcl/features/feature.h>

#include <pcl/features/normal_3d_omp.h>

#include <pcl/impl/point_types.hpp>

#include <pcl/features/boundary.h>

#include <pcl/visualization/cloud_viewer.h>

#include <pcl/filters/extract_indices.h>

#include <pcl/segmentation/progressive_morphological_filter.h>

#include <pcl/segmentation/sac_segmentation.h>

#include <pcl/common/centroid.h>

#include <pcl_conversions/pcl_conversions.h>

#include <sensor_msgs/PointCloud2.h>

#include <message_filters/sync_policies/approximate_time.h>

#include <message_filters/synchronizer.h>

#include <message_filters/subscriber.h>

#include <message_filters/time_synchronizer.h>

#include <darknet_ros_msgs/BoundingBoxes.h>

#include <darknet_ros_msgs/ObjectCount.h>

using namespace std;

using namespace sensor_msgs;

using namespace darknet_ros_msgs;

using namespace message_filters;

int objectnum;

struct Bounding

{

string obj;

int center_x;

int center_y;

};

struct Grasp

{

string name;

double x;

double y;

double angle;

};

vector<Bounding>objects;

vector<Grasp>grasps;

void BubbleSort(double arr[], int n)

{

for (int i = 0; i < n - 1; i++) {

for (int j = 0; j < n - i - 1; j++) {

if (arr[j] > arr[j + 1]) {

double temp = arr[j];

arr[j] = arr[j + 1];

arr[j + 1] = temp;

}

}

}

}

void msgtrans(const BoundingBoxes::ConstPtr &data)

{

objects.clear();

Bounding b;

for(int i=0;i<objectnum;i++)

{

b.obj=data->bounding_boxes[i].Class;

b.center_x=round(((data->bounding_boxes[i].xmin)+(data->bounding_boxes[i].xmax))/2);

b.center_y=round(((data->bounding_boxes[i].ymin)+(data->bounding_boxes[i].ymax))/2);

objects.push_back(b);

}

}

void yolomatchpcl(vector<Bounding>&a,vector<vector<double> >&b)

{

grasps.clear();

int i,distance,minid=0;

int mindistance=10000;

Grasp g;

while(grasps.size()<a.size())

{

for(int j=0;j<a.size();j++)

{

distance=(a[i].center_x-b[j][0])*(a[i].center_x-b[j][0])+(a[i].center_y-b[j][1])*(a[i].center_y-b[j][1]);

if(distance<=mindistance)

{

mindistance=distance;

minid=j;

}

}

cout<<mindistance<<endl;

g.name=a[i].obj;

g.x=b[minid][0];

g.y=b[minid][1];

g.angle=b[minid][2];

grasps.push_back(g);

minid=0;

mindistance=10000;

++i;

}

cout<<"匹配完成"<<endl;

}

int xtopixel(double x)

{

int ypixel=round((0.4-x)*480/0.475+240);

return ypixel;

}

int ytopixel(double y)

{

int xpixel=round(-y*640/0.633+320);

return xpixel;

}

void callback(const PointCloud2::ConstPtr &msg1,

const BoundingBoxes::ConstPtr &msg2,const ObjectCount::ConstPtr &msg3) {

pcl::PointCloud<pcl::PointXYZ>::Ptr cloud (new pcl::PointCloud<pcl::PointXYZ>);

pcl::PointCloud<pcl::PointXYZ>::Ptr cloudf (new pcl::PointCloud<pcl::PointXYZ>);

objectnum=msg3->count;

pcl::fromROSMsg(*msg1, *cloud);

msgtrans(msg2);

cout << " Cloud before filtering: " << cloud->points.size() << endl;

pcl::PassThrough<pcl::PointXYZ> pass;

pass.setInputCloud(cloud);

pass.setFilterFieldName("z");

pass.setFilterLimits(0.49, 0.52);

pass.setNegative(false);

pass.filter(*cloudf);

std::cout << "Cloud after filtering: " << cloudf->points.size() << endl;

std::cout << "PointCloud before sampling has: " << cloudf->points.size () << " data points." << std::endl;

pcl::VoxelGrid<pcl::PointXYZ> vg;

pcl::PointCloud<pcl::PointXYZ>::Ptr cloud_filtered (new pcl::PointCloud<pcl::PointXYZ>);

vg.setInputCloud (cloudf);

vg.setLeafSize (0.005f, 0.005f, 0.005f);

vg.filter (*cloud_filtered);

std::cout << "PointCloud after sampling has: " << cloud_filtered->points.size () << " data points." << std::endl;

pcl::search::KdTree<pcl::PointXYZ>::Ptr tree (new pcl::search::KdTree<pcl::PointXYZ>);

tree->setInputCloud (cloud_filtered);

std::vector<pcl::PointIndices> cluster_indices;

pcl::EuclideanClusterExtraction<pcl::PointXYZ> ec;

ec.setClusterTolerance (0.015);

ec.setMinClusterSize (100);

ec.setMaxClusterSize (25000);

ec.setSearchMethod (tree);

ec.setInputCloud (cloud_filtered);

ec.extract (cluster_indices);

int j = 0;

vector<vector<double> > out;

for (std::vector<pcl::PointIndices>::const_iterator it = cluster_indices.begin (); it != cluster_indices.end (); ++it)

{

pcl::PointCloud<pcl::PointXYZ>::Ptr cloud_cluster (new pcl::PointCloud<pcl::PointXYZ>);

for (std::vector<int>::const_iterator pit = it->indices.begin (); pit != it->indices.end (); ++pit)

cloud_cluster->points.push_back (cloud_filtered->points[*pit]);

cloud_cluster->width = cloud_cluster->points.size ();

cloud_cluster->height = 1;

cloud_cluster->is_dense = true;

std::cout << "PointCloud representing the Cluster: " << cloud_cluster->points.size () << " data points." << std::endl;

pcl::PointCloud<pcl::Normal>::Ptr normals(new pcl::PointCloud<pcl::Normal>);

pcl::PointCloud<pcl::Boundary> boundaries;

pcl::BoundaryEstimation<pcl::PointXYZ, pcl::Normal, pcl::Boundary> est;

pcl::search::KdTree<pcl::PointXYZ>::Ptr tree2(new pcl::search::KdTree<pcl::PointXYZ>());

pcl::NormalEstimationOMP<pcl::PointXYZ, pcl::Normal> normEst;

normEst.setNumberOfThreads(12);

normEst.setInputCloud(cloud_cluster);

normEst.setSearchMethod(tree2);

normEst.setRadiusSearch(0.02);

normEst.compute(*normals);

est.setInputCloud(cloud_cluster);

est.setInputNormals(normals);

est.setSearchMethod(tree2);

est.setKSearch(500);

est.compute(boundaries);

pcl::PointCloud<pcl::PointXYZ>::Ptr boundPoints(new pcl::PointCloud<pcl::PointXYZ>);

pcl::PointCloud<pcl::PointXYZ> noBoundPoints;

int countBoundaries = 0;

for (int i = 0; i<cloud_cluster->size(); i++)

{

uint8_t x = (boundaries.points[i].boundary_point);

int a = static_cast<int>(x);

if (a == 1)

{

(*boundPoints).push_back(cloud_cluster->points[i]);

countBoundaries++;

}

else

noBoundPoints.push_back(cloud_cluster->points[i]);

}

std::cout << "boundary "<<j<<" size is:" << countBoundaries << std::endl;

double list_dir[2];

double list_pre_dir[4];

Eigen::Vector4f centroid;

pcl::compute3DCentroid(*boundPoints, centroid);

pcl::ModelCoefficients::Ptr coefficients(new pcl::ModelCoefficients);

pcl::PointIndices::Ptr inliers(new pcl::PointIndices);

pcl::SACSegmentation<pcl::PointXYZ> seg2;

seg2.setOptimizeCoefficients(true);

seg2.setModelType(pcl::SACMODEL_LINE);

seg2.setMethodType(pcl::SAC_RANSAC);

seg2.setDistanceThreshold(0.001);

for(int i=0;i<4;i++)

{

pcl::PointCloud<pcl::PointXYZ>::Ptr cloud_line(new pcl::PointCloud<pcl::PointXYZ>);

pcl::PointCloud<pcl::PointXYZ>::Ptr cloud_out_line(new pcl::PointCloud<pcl::PointXYZ>);

seg2.setInputCloud(boundPoints);

seg2.segment(*inliers, *coefficients);

list_pre_dir[i]=coefficients->values[4]/coefficients->values[3];

pcl::ExtractIndices<pcl::PointXYZ> extract;

extract.setInputCloud (boundPoints);

extract.setIndices (inliers);

extract.setNegative (false);

extract.filter (*cloud_line);

std::cout << "PointCloud"<<i<< "representing the line component: " << cloud_line->points.size () << " data points." << std::endl;

extract.setNegative (true);

extract.filter (*cloud_out_line);

*boundPoints = *cloud_out_line;

}

BubbleSort(list_pre_dir,4);

list_dir[0]=(list_pre_dir[0]+list_pre_dir[1])/2;

list_dir[1]=(list_pre_dir[2]+list_pre_dir[3])/2;

double angle_y=1.57-atan(list_dir[1]);

std::cout << "Z轴旋转角为:" << angle_y<< endl;

vector<double>out_element;

out_element.push_back(ytopixel(-centroid[0]));

out_element.push_back(xtopixel(0.4-centroid[1]));

out_element.push_back(angle_y);

out.push_back(out_element);

cout <<out.size()<<endl;

j++;

}

yolomatchpcl(objects,out);

for(int k=0;k<out.size();k++)

{

cout <<grasps[k].name<<" 质心是("

<< grasps[k].x << ","

<< grasps[k].y <<")"

<<"旋转角为"<<grasps[k].angle<<"." << endl;

}

out.clear();

cout<<"copy that"<<endl;

}

int main(int argc, char** argv)

{

ros::init(argc, argv, "pclproc");

ros::NodeHandle nh;

message_filters::Subscriber<PointCloud2> pcl_sub(nh, "/camera/depth/points", 1);

message_filters::Subscriber<BoundingBoxes> yolo_sub(nh, "/darknet_ros/bounding_boxes", 1);

message_filters::Subscriber<ObjectCount> count_sub(nh, "/darknet_ros/found_object", 1);

typedef sync_policies::ApproximateTime<PointCloud2,

BoundingBoxes,ObjectCount>

syncPolicy;

Synchronizer<syncPolicy> sync(syncPolicy(10), pcl_sub, yolo_sub,count_sub);

sync.registerCallback(boost::bind(&callback, _1, _2,_3));

ros::spin();

return 0;

}

|

效果演示

在构思好位置信息的发布形式后,食品分类和工件装配将同步进行,预期通过某种时序控制完成笛卡尔规划与双臂运动

,

为输出特征图格点数,一共3个Anchor框,每个框有4维预测框数值

,1维预测框置信度,80维物体类别数。所以第一层特征图的输出维度为

。

;而concat操作源于DenseNet网络的设计思路,将特征图按照通道维度直接进行拼接,例如8816的特征图与8816的特征图拼接后生成8832的特征图。